What are we monitoring?

There is no universally accepted definition of hate speech in human rights law. We are adopting Article 19’s definition: hate speech is any expression of discrimination or advocacy of hatred towards individuals or groups on the basis of specific protected characteristics.

These expressions, opinions or ideas of hate may be written, non-verbal, visual, artistic or in some other form, and may be disseminated through any media, including the internet, print, radio, or television.

The project will monitor:

Levels of hate speech in narratives and content on race, religion, royalty, gender and LGBTIQ, and refugees and migrants, as distributed by actors such as politicians, political parties, government agencies, media organisations, and key opinion leaders.

Coordinated Inauthentic Behaviour (CIB), such as activity by bots and cybertroopers.

Scope of Analysis

We have adopted the 3M framework[i].

3M stands for Messenger, Message and Messaging.

Messenger

[Subject / Actor / Source]

- Political parties

- Politicians

- Media

- Government agencies

- Key opinion leaders

Message

Key areas of focus:

- 3R – race, religion, royalty

- Gender and LGBTIQ

- Refugees and migrants

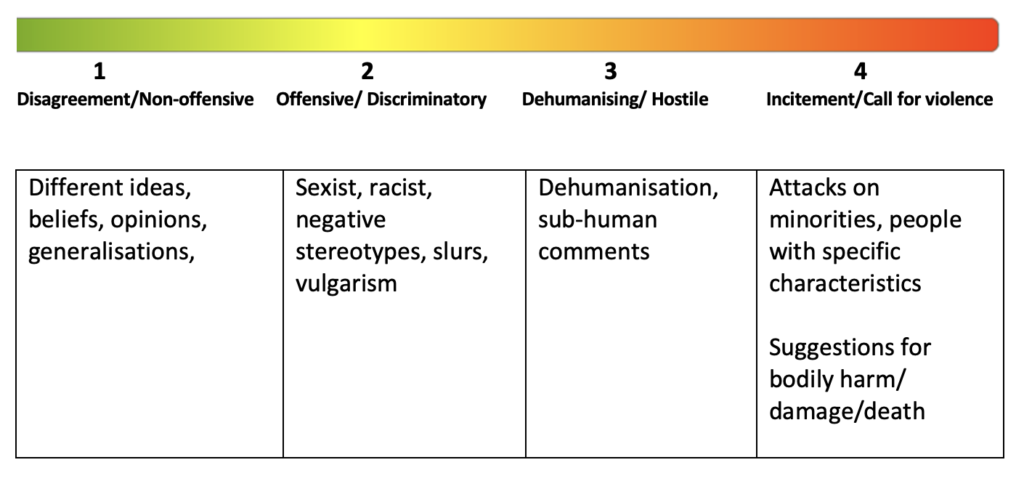

We have developed a severity scale in which we categorise the levels of hate speech as follows:

- Disagreement / non-offensive

- Offensive / discriminatory

- Dehumanising / hostile

- Incitement / call for action (violence)
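
For monitors who record tags in a spreadsheet or script, the four levels above could be encoded as an ordered type. This is a minimal sketch, not part of the project's tooling: the numeric values 1 to 4 and the names `Severity` and `most_severe` are assumptions made for illustration, and the ordering simply follows the order in which the levels are listed.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Severity scale for hate speech; numeric values are an assumed ordering."""
    DISAGREEMENT = 1   # Disagreement / non-offensive
    OFFENSIVE = 2      # Offensive / discriminatory
    DEHUMANISING = 3   # Dehumanising / hostile
    INCITEMENT = 4     # Incitement / call for action (violence)


def most_severe(levels):
    """Return the highest severity level found in a collection of tags."""
    return max(levels)


# Example: a thread containing posts tagged at three different levels.
thread_tags = [Severity.DISAGREEMENT, Severity.DEHUMANISING, Severity.OFFENSIVE]
print(most_severe(thread_tags).name)
```

Using an ordered type lets tagged posts be compared or sorted by severity without relying on free-text labels.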

Messaging

(platform for distribution of hate speech)

Platforms

- YouTube

- TikTok

- Possible bots

- Possible cybertroopers

[i] Monitoring of Media Coverage of Elections: Toolkit for civil society organisations, Council of Europe, November 2020, pp. 33-34.

Monitoring Tools

We are using Zanroo, a customised tool, to scrape data on identified issues, themes and accounts through a combination of keywords and character embeddings.

The scraped dataset may contain anomalies, where posts are inaccurately flagged. Our human monitors therefore review, categorise and tag the data according to keywords. They also assign each post a level on the severity scale and check the authenticity of accounts using bot-checking sites.
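
The two-stage process described above (automated flagging, then human review) can be sketched roughly as follows. This is an illustrative outline only and does not use or represent Zanroo's interface: the names `Post`, `flag_posts` and `record_review` are hypothetical, the matching is plain case-insensitive keyword search (the character-embedding step is omitted), and the keywords are placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Post:
    text: str
    matched_keywords: List[str] = field(default_factory=list)
    reviewed: bool = False
    # Severity level 1-4 set by a human monitor (assumed numbering);
    # None after review means the monitor judged the flag inaccurate.
    severity: Optional[int] = None


def flag_posts(texts, keywords):
    """Stage 1: flag any post containing at least one keyword (case-insensitive)."""
    lowered = [k.lower() for k in keywords]
    flagged = []
    for text in texts:
        hits = [k for k in lowered if k in text.lower()]
        if hits:
            flagged.append(Post(text=text, matched_keywords=hits))
    return flagged


def record_review(post, severity):
    """Stage 2: store the human monitor's decision for a flagged post."""
    post.reviewed = True
    post.severity = severity
    return post


# Placeholder data: one post matches the keyword list, one does not.
flagged = flag_posts(["A post mentioning KeywordA", "An unrelated post"], ["keyworda"])
record_review(flagged[0], severity=None)  # monitor marks it as inaccurately flagged
```

Keeping the automated match and the human decision as separate fields makes it possible to see afterwards how often the scraper's flags were overturned on review.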